ChatGPT Memory Isn’t Just Smart - It’s the Blueprint for AI-First Product Thinking

How remembering you like bullet points isn’t a gimmick, it’s the future of personalized software.

“Users won’t fall in love with your AI because it’s clever. They’ll stick because it remembers.”

When people talk about ChatGPT, most still treat it like a smarter Google.

But if you're building or working with AI products in 2025, there’s one underrated feature you must get obsessed with: Memory.

In this post, I’m going deep into why ChatGPT’s memory is not just a feature, but a masterclass in product design. (Want the quick take? Jump to the TL;DR at the end.)

ChatGPT: The Transactional Trap vs. the Relationship Shift

When ChatGPT launched, it dazzled the world with its ability to write poems, debug code, and summarize articles.

But most users still interact with it like a vending machine:

Prompt in.

Output out.

Forget and repeat.

But OpenAI isn’t just building a better Google.

They’re building a long-term AI companion - one that remembers.

Without memory:

You: “Help me write a Twitter post about my product.”

ChatGPT: “Do you want it to be hype, funny, professional, casual…?”

With memory:

ChatGPT: “promoting something you’ve built (like Product Unshipped)”

The difference? Continuity. Recognition. Emotional equity.

Memory = Stickiness

Why do people return to Spotify, Notion, or even Duolingo? Because those tools remember them.

Memory changes ChatGPT from:

Tool → Companion

Session-based bot → Continuity-driven partner

Cold answers → Warm alignment

It’s not about intelligence. It’s about emotional UX.

The Psychology of Recognition: Humans are hardwired to value being remembered. A Harvard study found that people rate service interactions 34% more positively when the provider recalls their name and preferences. ChatGPT’s Memory taps into this primal need—not through gimmicks, but through utility.

How ChatGPT Memory Actually Works (Like a PM System)

Most people assume memory is just "saved text."

That’s only surface-level.

Let’s break it down from both a product lens and a technical architecture standpoint:

Technical View: How Memory Works Behind the Scenes

Storage Structure: ChatGPT stores memory as structured summaries, not raw chat logs. These summaries are contextually scoped and indexed by topic.

Trigger Mechanism: The model learns to extract memorable info based on frequency, explicit feedback, and salience heuristics. For example:

If you say "Remember I’m vegetarian," it's added as a high-confidence entry.

If you discuss a project 4+ times, it may be inferred as a persistent theme.
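To make the trigger idea concrete, here is a minimal sketch of the two examples above. It is not OpenAI's implementation: `MemoryCandidate`, `extract_candidates`, and the `topic_of` callback are hypothetical names, and the "starts with remember" check is a crude stand-in for whatever the real model does.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class MemoryCandidate:
    text: str
    confidence: str  # "high" for explicit requests, "inferred" for repeated themes


REPEAT_THRESHOLD = 4  # mirrors the "4+ times" example; an assumption, not a known constant


def extract_candidates(messages, topic_of):
    """Collect memory candidates from user messages.

    topic_of is a hypothetical callable that maps a message to a topic
    label, or None when the message has no trackable topic.
    """
    candidates = []
    topic_counts = Counter()
    for msg in messages:
        if msg.lower().startswith("remember "):
            # Explicit request: store the rest of the sentence with high confidence.
            candidates.append(MemoryCandidate(msg[len("remember "):].strip(), "high"))
            continue
        topic = topic_of(msg)
        if topic is not None:
            topic_counts[topic] += 1
    for topic, count in topic_counts.items():
        if count >= REPEAT_THRESHOLD:
            # Repeated theme: inferred, so it gets a lower-trust label.
            candidates.append(MemoryCandidate(f"Recurring topic: {topic}", "inferred"))
    return candidates
```

The product point is the `confidence` label: an explicit request and a guess from repetition should not be treated as equally reliable.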

Memory Update Logic: ChatGPT dynamically updates memory using conversational reinforcement and background rules like:

Merge similar memories

Drop low-salience entries when space runs out

Prioritize emotionally tagged or task-relevant items
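Those three rules can be sketched in a few lines. This is an illustration under loud assumptions: `MemoryEntry`, `update_store`, and the numeric `salience` score are hypothetical, and case-insensitive text matching stands in for real similarity detection.

```python
from dataclasses import dataclass


@dataclass
class MemoryEntry:
    text: str
    salience: float  # higher means more worth keeping (hypothetical score)


def word_count(entries):
    return sum(len(e.text.split()) for e in entries)


def update_store(store, new_entry, max_words):
    """Merge duplicates, then evict the least salient entries until the store fits."""
    merged = {}
    for entry in store + [new_entry]:
        key = entry.text.strip().lower()  # stand-in for real similarity matching
        if key in merged:
            # Merge similar memories, keeping the first wording and the higher score.
            best = max(merged[key].salience, entry.salience)
            merged[key] = MemoryEntry(merged[key].text, best)
        else:
            merged[key] = entry
    # Prioritize: most salient first, so eviction removes from the tail.
    kept = sorted(merged.values(), key=lambda e: e.salience, reverse=True)
    while kept and word_count(kept) > max_words:
        kept.pop()  # drop the lowest-salience entry when space runs out
    return kept
```

In this sketch an emotionally tagged or task-relevant memory would simply arrive with a higher `salience`, which is why it survives eviction.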

Retrieval Pipeline: When you prompt ChatGPT, it loads relevant memory slices before chat context, like a vectorized primer. This helps it shape tone, language, and references accordingly.
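Here is a toy version of that retrieval step, offered as a sketch rather than a description of the real pipeline. The function names and the `[memory]` / `[user]` tags are made up for illustration, and plain word overlap stands in for vector similarity.

```python
def relevance(memory, prompt):
    """Fraction of the memory's words that also appear in the prompt (toy similarity)."""
    mem_words = set(memory.lower().split())
    prompt_words = set(prompt.lower().split())
    return len(mem_words & prompt_words) / len(mem_words) if mem_words else 0.0


def build_context(memories, prompt, top_k=2):
    """Place the most relevant memories ahead of the user's prompt."""
    scored = [(relevance(m, prompt), m) for m in memories]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    relevant = [m for score, m in ranked if score > 0][:top_k]
    primer = "\n".join(f"[memory] {m}" for m in relevant)
    return f"{primer}\n[user] {prompt}" if primer else f"[user] {prompt}"
```

Memories with no overlap are left out entirely, so an unrelated preference never leaks into the context.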

Limitations:

Total memory is ~1200-1400 words.

When full, it stops remembering new things until you delete old ones.

You can't yet label or rank memories (but power users simulate this through structured inputs).
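The "full means stop" behavior is easy to picture as a word-budgeted store. A minimal sketch, assuming a hypothetical `BoundedMemory` class and taking the lower end of the range above as the default budget:

```python
class BoundedMemory:
    """Toy store that refuses new entries once a word budget is used up."""

    def __init__(self, max_words=1200):  # budget taken from the estimate above
        self.max_words = max_words
        self.entries = []

    def used_words(self):
        return sum(len(entry.split()) for entry in self.entries)

    def remember(self, text):
        """Store text if it fits; return False and store nothing when full."""
        if self.used_words() + len(text.split()) > self.max_words:
            return False
        self.entries.append(text)
        return True

    def forget(self, text):
        """Delete an old entry to free up room for new ones."""
        self.entries.remove(text)
```

The design consequence for users: nothing is evicted automatically in this model, so curation is a manual job.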

Key product insight: Memory is often inferred rather than explicitly requested. Unless you train it, it trains itself, imperfectly.

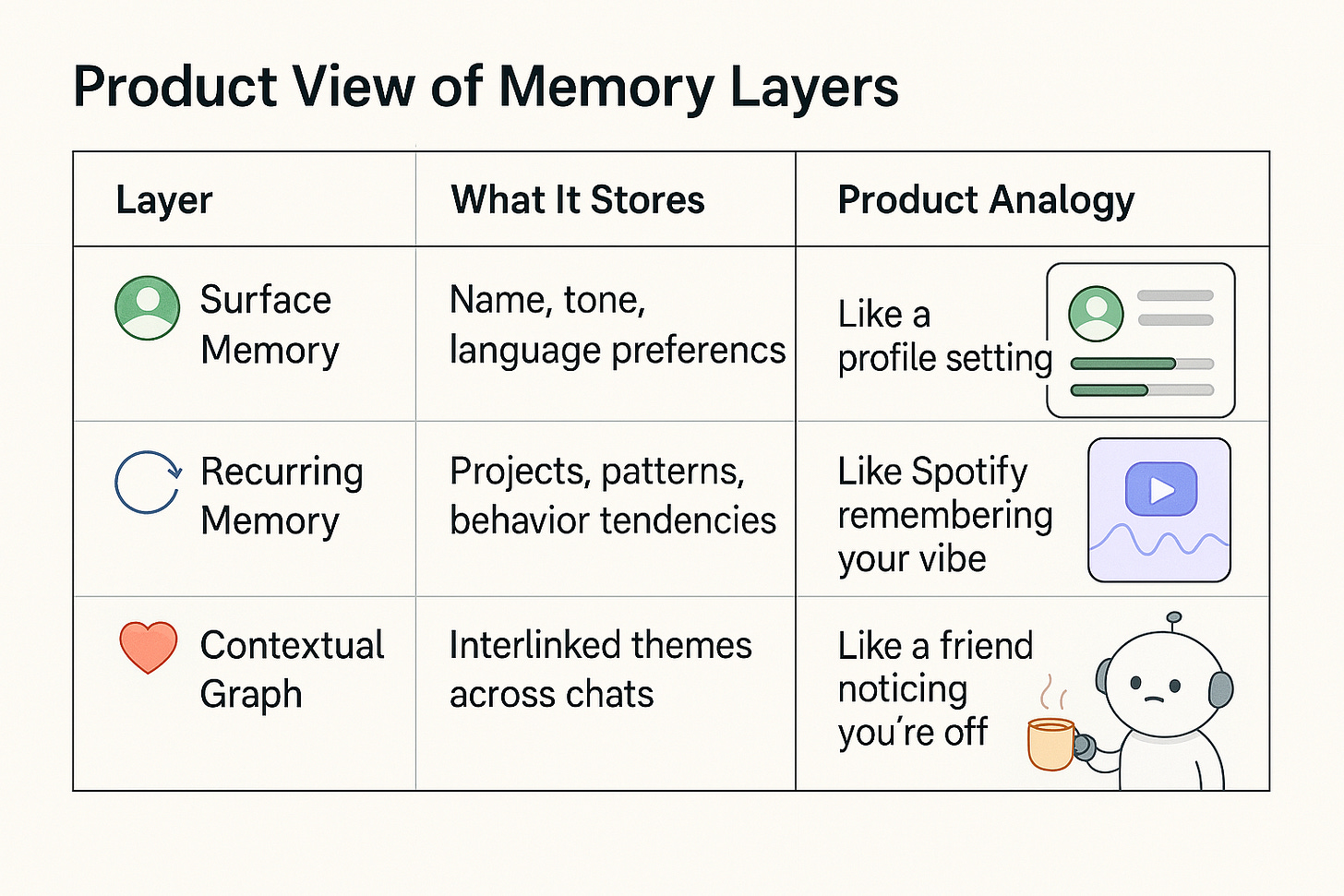

Memory Decoded - The Technical and Emotional Architecture

What Memory Actually Does (Under the Hood)

Memory isn’t just a notepad. It’s a layered system:

Explicit Memory:

You: “Call me Lenny.”

ChatGPT: Stores “User prefers ‘Lenny’” in a structured database.

Implicit Memory:

You: Consistently ask for Python code examples.

ChatGPT: Learns to default to Python without being told.

Contextual Memory:

You: Discuss a project about sustainable fashion for three sessions.

ChatGPT: Builds a knowledge graph linking “sustainability,” “material innovation,” and “consumer behavior.”

Emotional Memory:

You: “I’m feeling stuck on this problem.”

ChatGPT: Adjusts response tone to be more encouraging.
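If you wanted to prototype these four layers in your own product, one possible shape is below. Everything here is an assumption for illustration: the `UserMemory` class, its fields, and the keyword checks ("call me", "stuck") are crude stand-ins for what a model would infer.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class UserMemory:
    explicit: dict = field(default_factory=dict)                # facts the user stated
    language_counts: Counter = field(default_factory=Counter)   # implicit habit signal
    topics: set = field(default_factory=set)                    # contextual themes
    tone: str = "neutral"                                       # emotional adjustment

    def observe(self, message, language=None, topics=()):
        lowered = message.lower()
        if lowered.startswith("call me "):
            # Explicit memory: the user told us directly.
            self.explicit["name"] = message[len("call me "):].strip(" .")
        if language:
            # Implicit memory: count what the user keeps asking for.
            self.language_counts[language] += 1
        # Contextual memory: accumulate recurring themes across sessions.
        self.topics.update(topics)
        if "stuck" in lowered:
            # Emotional memory: soften the tone when the user sounds blocked.
            self.tone = "encouraging"

    def default_language(self):
        top = self.language_counts.most_common(1)
        return top[0][0] if top else None
```

A set of topics is a much simpler structure than a knowledge graph, but it is enough to show how the layers stay separate.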

The Privacy Tightrope

OpenAI walks a delicate line:

Memory is opt-in (default off for enterprises).

Users can view, edit, or wipe memories.

Users can keep memory content out of model training through their data controls, and business plans are excluded from training by default.

This isn’t just ethical—it’s strategic. A Salesforce report found 62% of users would abandon an AI product that felt “too invasive.” Memory succeeds by being useful without being creepy.

The 7 Unspoken Rules of AI Memory ~ A Product Masterclass

Rule 1: Memory is a Narrative, Not a Database

Bad memory systems feel like stalkers. Good ones feel like biographers.

Example:

Bad: “You ordered a latte yesterday. Want another?”

Good: “Last month you explored pour-over techniques - should we revisit coffee experiments?”

Takeaway: Chain memories into stories. Show progression, not just repetition.

Rule 2: Forgetting is a Feature

The ability to erase is what makes memory safe.

Pattern:

Let users reset memories by topic (“Delete all coding-related data”).

Auto-expire trivial memories after 30 days (e.g., “User likes emojis in casual chats”).
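Both patterns fit in a handful of lines. A sketch, assuming a hypothetical `TopicMemory` record with a `topic` label and a `trivial` flag that your own system would need to assign:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class TopicMemory:
    text: str
    topic: str
    created: datetime
    trivial: bool = False  # hypothetical flag for low-stakes preferences


TRIVIAL_TTL = timedelta(days=30)  # the 30-day window from the pattern above


def delete_topic(memories, topic):
    """User-initiated reset: drop everything filed under one topic."""
    return [m for m in memories if m.topic != topic]


def expire_trivial(memories, now):
    """Automatic cleanup: drop trivial memories older than the TTL."""
    return [m for m in memories if not (m.trivial and now - m.created > TRIVIAL_TTL)]
```

Passing `now` in as an argument, rather than reading the clock inside the function, keeps the expiry rule easy to test.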

Rule 3: Memory Requires Forgetting

Ironically, great memory systems need selective amnesia.

Technical Insight: ChatGPT uses attention filtering - prioritizing memories that:

Recur across sessions

Trigger emotional valence (e.g., user frustration)

Align with stated goals
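One way to turn those three signals into a ranking is a weighted score. This is a sketch, not how ChatGPT does it: the weights (0.5, 0.3, 0.2), the five-session cap, and the function names are all invented for illustration.

```python
def priority(sessions_seen, emotional_valence, matches_goal):
    """Combine recurrence, emotional intensity, and goal alignment into one score."""
    recurrence = min(sessions_seen, 5) / 5       # saturates after five sessions
    emotion = min(abs(emotional_valence), 1.0)   # strong feelings count, positive or negative
    goal = 1.0 if matches_goal else 0.0
    return 0.5 * recurrence + 0.3 * emotion + 0.2 * goal


def rank(memories):
    """memories: list of (text, sessions_seen, emotional_valence, matches_goal) tuples."""
    ordered = sorted(memories, key=lambda m: priority(*m[1:]), reverse=True)
    return [m[0] for m in ordered]
```

Whatever falls to the bottom of this ranking is the natural candidate for forgetting.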

Rule 4: Anticipate, Don’t Assume

Memory shouldn’t feel like a script.

UX Principle:

Use memories to suggest (“Last time you preferred bullet points - use them again?”)

Never use memories to presume (“Since you’re a beginner…” when the user is an expert)

Rule 5: Layer Memories Like an Onion

Surface Layer: Name, tone, format preferences

Middle Layer: Recurring projects, goals

Core Layer: Values, communication taboos

Peel layers slowly as trust builds.
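In code, "peel slowly" can be as simple as gating each layer behind a trust threshold. A sketch where completed sessions are a proxy for trust; the thresholds (0, 3, 10) and names are assumptions, not a recommendation.

```python
# Minimum number of completed sessions before a layer may be stored or used.
LAYER_MIN_SESSIONS = {"surface": 0, "middle": 3, "core": 10}


def unlocked_layers(sessions_completed):
    """Return the layers available at this trust level, outermost first."""
    return [
        layer
        for layer, needed in LAYER_MIN_SESSIONS.items()
        if sessions_completed >= needed
    ]
```

A new user only ever sees surface-level personalization, while values and taboos wait until the relationship has some history.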

Rule 6: Make Memory a Collaboration

Bad: “I remember you like startups.”

Good: “I noticed you often discuss lean methodologies—should I prioritize those frameworks?”

Pro Tip: Let users label memories (“Save as part of my UX design principles”).

Rule 7: Memory Demands Responsible Fading

Not all memories should last forever.

Auto-Archive Rules:

Unreferenced for 90 days → Move to cold storage

Contradicted by new inputs → Flag for review

Associated with negative feedback → Deprioritize
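These rules map almost one-to-one onto code. A minimal sketch, assuming a hypothetical `MemoryState` record and action names of my own choosing:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class MemoryState:
    last_referenced: datetime
    contradicted: bool = False
    negative_feedback: bool = False


ARCHIVE_AFTER = timedelta(days=90)  # the 90-day rule from the list above


def fade_actions(state, now):
    """Return the fading actions that apply to one memory, in rule order."""
    actions = []
    if now - state.last_referenced > ARCHIVE_AFTER:
        actions.append("archive")  # move to cold storage
    if state.contradicted:
        actions.append("flag_for_review")
    if state.negative_feedback:
        actions.append("deprioritize")
    return actions
```

Returning a list matters because the rules are not exclusive: a stale memory can also be a contradicted one.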

The Future of Memory - Beyond ChatGPT

Horizon 1: Cross-Platform Memory

Imagine:

ChatGPT remembers your Slack convos (with permission)

Your project management AI knows your email priorities

Key Challenge: Standardizing memory schemas across apps.
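No such standard exists today, but a shared schema might look something like the sketch below. The `SharedMemory` fields, the version string, and the consent check are all hypothetical; the point is that permission travels with the record.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class SharedMemory:
    schema_version: str
    source_app: str
    kind: str            # e.g. "preference" or "project"
    content: str
    user_consented: bool


def export_memory(memory):
    """Serialize a memory for another app, but only with the user's permission."""
    if not memory.user_consented:
        raise PermissionError("memory cannot leave its app without user permission")
    return json.dumps(asdict(memory), sort_keys=True)


def import_memory(payload):
    """Rebuild a memory from the shared JSON format."""
    return SharedMemory(**json.loads(payload))
```

A `schema_version` field is the cheap insurance here: apps can evolve their memory formats without silently misreading each other.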

Horizon 2: Emotional Intelligence

Detect stress cues (shorter sentences, typos) → Soften tone

Recognize celebration moments → Add celebratory emojis

Horizon 3: Collaborative Memory

Teams training shared AI memories (“Our brand voice guidelines”)

Families creating memory pools for elderly care AIs

Conclusion: The End of Blank Slates

In 1994, Wired proclaimed: “The best interfaces get out of the way.” In 2025, the new mantra is: “The best interfaces remember their way.”

ChatGPT’s Memory isn’t just a feature - it’s a declaration that AI’s future lies in relationships, not transactions. For product leaders, the lesson is clear: Users will abandon tools that treat every interaction like a first date.

The next billion-dollar AI product won’t be the smartest. It’ll be the one that remembers.

TL;DR: Why ChatGPT Memory Changes the Game for AI Product Thinking

Memory = Stickiness: AI that remembers > AI that answers. It’s the secret to continuity, emotional UX, and user trust.

Treat Memory Like a Wiki, Not a Diary: Curate it. Clean it. Collaborate with it. Don’t expect it to self-organize magically.

Prompt Like a Product Manager: Use memory-aware prompts to activate context. Think: "Use my writing tone from last week."

Build Memory Into Your Own Tools: Let users review, label, and edit AI memory. Design for trust and transparency, not control.

Don’t Just Save—Synthesize: Memory is valuable only when it leads to better suggestions. Store less, learn more.

The Future Is Familiarity: The next great AI tool won’t feel like a blank slate. It’ll feel like something that knows you—quietly, respectfully, intelligently.