What Everyone Misses About LLM Adoption

The first technology to give individuals superpowers before institutions could catch up, and what that inversion means for the future of product, power, and pace.

Last month, I watched my cousin, who runs a tuition center in Sonipat, Haryana, use ChatGPT to draft her first lesson plan in under five minutes. She had never written a formal curriculum before, but she wanted a structured plan for her students preparing for their board exams.

By the time I messaged her again, she had already filmed and posted a video tutorial online, with ChatGPT writing the script.

That same week, a Fortune 500 legal team was still debating whether to pilot an internal GPT integration. After months of security reviews, compliance gates, and budget sign‑offs, they remain in “evaluation mode.”

That contrast isn’t a fluke. It’s the new reality of AI diffusion, and it breaks every rule we thought we knew about how technology spreads.

TL;DR

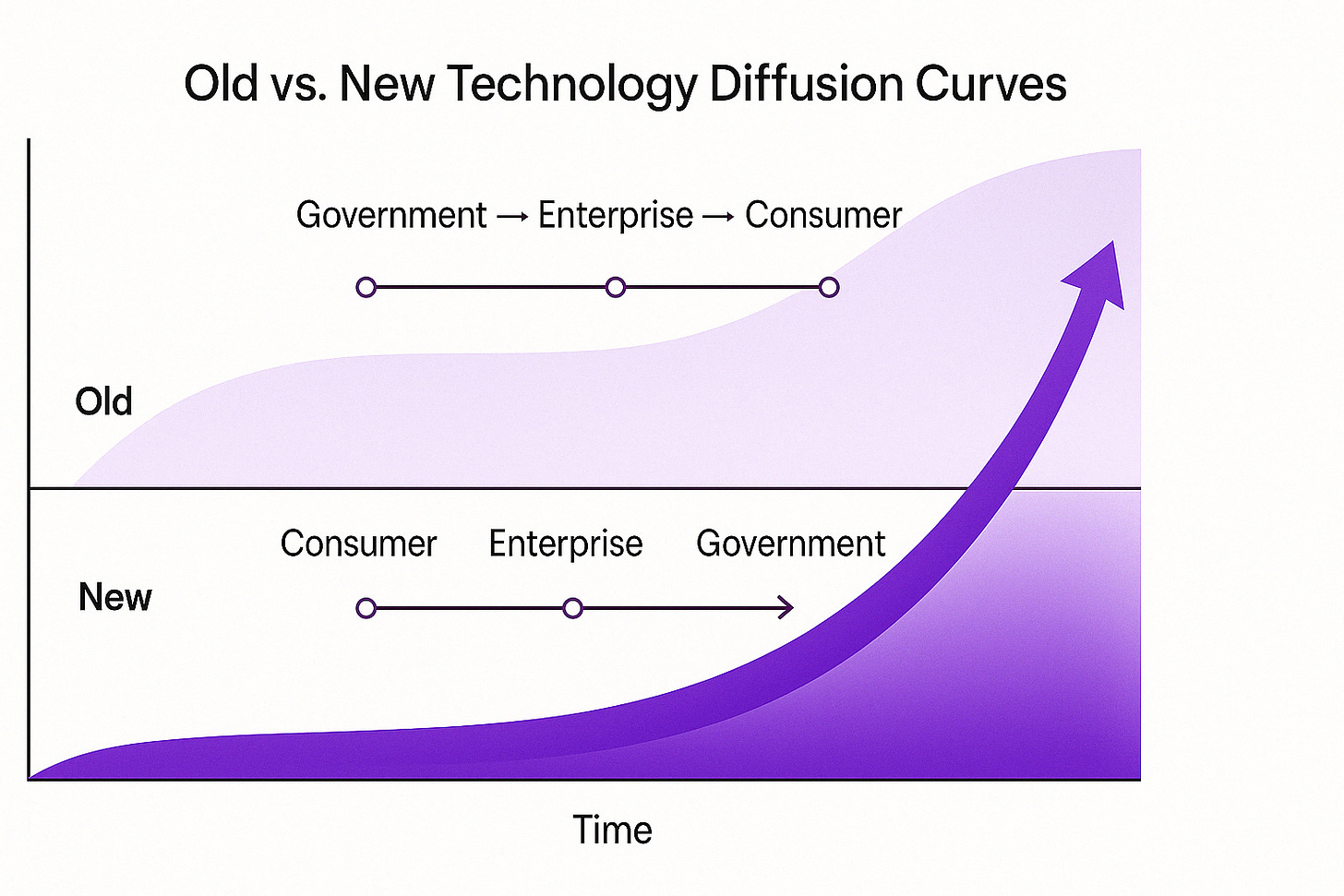

Most technologies follow a top-down adoption curve: from government → corporations → individuals

LLMs broke this pattern. They empower individuals more than they do companies or governments, at least for now

This is due to LLMs’ wide-but-shallow capabilities, low friction, and consumer-first accessibility

Meanwhile, orgs struggle with complexity, coordination, risk, and inertia

The benefits of LLMs could re-concentrate as capital starts to buy better AI

For product builders, this inversion is a once-in-a-generation shift in how technology diffuses

The Standard Pattern: Innovation Moves Top-Down

Historically, powerful technologies have typically followed a predictable adoption arc:

Governments invent → Corporates implement → Individuals adopt.

Electricity, cryptography, computers, flight, GPS, even the internet: each was shaped early on by militaries, academic labs, or corporate R&D, and only later trickled down into daily life.

Why?

Because most breakthrough technologies begin as:

Expensive to build

Hard to scale

Technical to operate

Risky to use

Limited to those with capital or control

Power, in tech, has always flowed from the top.

And it’s always felt intuitive. Advanced capabilities needed infrastructure, funding, and specialized talent, things that only institutions had.

The Flip: LLMs Didn't Follow the Script

Large Language Models didn’t trickle down.

They burst up.

ChatGPT became one of the fastest-growing consumer products in history, reaching a reported 400 million weekly users without ever passing through a government lab or an enterprise procurement team.

Instead, it:

Launched in a browser

Was free (or close to it)

Spoke your native language

Needed no technical background

Worked well enough immediately

It didn’t require power to use. It gave power to anyone who tried it.

LLMs unlocked a wide set of capabilities (writing, coding, summarizing, researching, translating, ideating, and marketing) that were previously locked behind years of training or access to specialists.

This wasn’t a productivity boost. It was a capability unlock. And the barrier to entry was basically zero.

Why Regular People Are Feeling It First

LLMs don’t just make people better at what they already do. They make them capable of things they couldn’t do at all.

The average person is a specialist in maybe one thing: marketing, writing, teaching, or design. But LLMs can do a little bit of everything:

Code basic apps

Draft contracts

Summarize research papers

Generate data insights

Create ad copy

Build presentations

Translate documents

Answer customer queries

Remix content across formats

Even if the model isn’t perfect, it’s good enough for someone to get started and do things that previously required another expert.

LLMs don’t just extend your skills. They extend your reach.

And for individuals, that’s transformative.

Why Organizations Lag Behind

If this tech is so powerful, why haven’t companies adopted it as fast?

Because companies and governments operate under a completely different set of constraints.

1. LLMs offer broad but shallow intelligence

They’re strong generalists, but they hallucinate, lack precision, and can't guarantee correctness.

For a company:

A flawed clause in a contract is a liability

A wrong API response is a production bug

A misleading answer can erode trust at scale

LLMs make orgs slightly more efficient at what they already do. They make individuals able to do things they couldn’t even attempt.

And that’s a much bigger delta.

2. Complexity multiplies inside institutions

Org work isn’t just about output. It’s about fitting that output into a complex, risk-managed, interdependent system:

Brand consistency

Regulatory compliance

Privacy and security

Accessibility

Localization

Infrastructure compatibility

Legal review

Approval flows

Audit trails

You can’t just copy-paste a prompt and ship.

The context window of organizational reality is far bigger than any GPT context window.

3. Inertia is real and human

Most orgs don’t move slowly because of tech limits. They move slowly because of people.

Change threatens roles

Tools require training

Decisions need alignment

Culture needs rewriting

Risk needs a scapegoat

The more distributed the org, the more resistance to change.

Meanwhile, individuals don’t need alignment. They just open a tab and build.

4. A Strange Window of True Equality

Right now, something rare is happening:

Everyone is using the same AI.

No elite tier

No secret access (unless the labs have built something far more powerful that they aren’t releasing)

No corporate-grade version

You and Bill Gates talk to the same model. Your child and a government think tank run the same prompts.

This flatness is unprecedented.

But will it last?

5. The Coming Split: When Money Buys Better AI

Right now, money buys only a modest edge: a paid plan gets you a somewhat better model, not a categorically different one. But a few trends are shifting that:

Train-time scaling → Bigger models, better data

Test-time scaling → More compute spent reasoning at inference time

Model ensembles → Specialized agents in parallel

These push the performance ceiling up.

On the flip side:

Model distillation compresses large models into smaller, cheaper ones, which keeps the floor accessible but narrows the middle.

The moment money can buy significantly better intelligence, the distribution flips again.

And it won’t just be orgs that benefit:

Rich individuals will access better tutors, copilots, and creators

Companies will deploy higher-reliability, agentic systems

The elite will upgrade again and leave others behind

The equality we see now? It may be a blip.

6. For Builders: What This Inversion Teaches Us

This inversion is a once‑in‑a‑generation opportunity. Here’s your playbook:

🔍 1. Build for Capability Unlock, Not Just Efficiency

Identify what users can’t do today, and use AI to make it possible.

For example, “Generate MVP code” beats “Optimize existing code.”

🎯 2. Design for Generalists, Not Experts

Surface “good enough” defaults.

Guide users through prompts.

Embrace safe failure modes (e.g., “Draft, then review”).

🪜 3. Optimize for Person‑to‑Person Diffusion

Make it easy to share outputs (links, embeds).

Reward creators who publish AI‑powered artifacts.

🔧 4. Support Lightweight Workflows

Copy‑paste code snippets, prompt templates, one‑click integrations.

Avoid heavy SDKs, complex onboarding.

⏳ 5. Move Fast Before the Window Closes

Assume a premium tier emerges in 6–12 months.

Build your moat on distribution and user network effects.

Act now, before capital re‑centralizes power.

Final Thought: This Wasn’t Supposed to Happen

LLMs weren’t supposed to be a free chat widget on your phone. They were meant for labs, government research, and high‑cost corporate pilots.

But here we are.

“The future is already here, it’s just not very evenly distributed.” - William Gibson

But right now, it is evenly distributed. And that’s the real miracle.

For product builders, this is your moment to invent, iterate, and inspire on a level playing field. When the next twist comes and money starts buying better AI, only the builders who moved fast and went deep will keep their edge.

Let’s build while the power truly lies with the people.
