AI Product Management 101: What Every PM Must Master Before It’s Too Late (PART-1)

This is Part 1 of the three-part AI Product Management 101 series you all requested, where I explore the topic in depth, from 0 to 1.

Everyone’s talking about AI tools.

PMs posting screenshots of their first Claude-generated PRD.

Tweets about Perplexity as a research assistant.

Teams are throwing "AI" into pitch decks like it’s seasoning.

But here’s the problem:

AI is not just a tool. It’s a paradigm shift.

And unless you understand what that shift is, you’re not managing AI products.

You’re adding AI to products.

There’s a big difference.

Marily Nika said it best on Lenny’s podcast:

PMs must stop treating AI like a shiny feature. It's a fundamental change to how we discover, build, and learn.

This isn’t about prompting harder. Or finding the best model. Or memorizing AI jargon to impress leadership.

This is about product judgment. Product responsibility. Product depth.

This is about how:

Your role as a PM evolves from roadmap planner → system sense-maker

Your product changes from workflow execution → behavior orchestration

Your risk shifts from tech feasibility → probabilistic trust management

So this article won’t teach you how to "use ChatGPT better."

It will teach you how to think like a product manager in the age of AI. Once you understand these shifts, everything—your discovery approach, your specs, your cross-functional rituals—will evolve too.

Because, as Marty Cagan said in his 2024 piece,

The PM role becomes more essential and more difficult with generative AI.

What Makes AI Product Management Fundamentally Different

Most AI product conversations miss the mark:

They jump to prompting hacks,

Claude's artifacts, or

shiny workflows, without grasping what actually makes AI different to manage.

This section is not a framework dump. It’s a reality check.

Drawing from Marily Nika’s experience building AI at Meta and Google, Marty Cagan’s AI product risk lens, and the deep insights from Lenny’s podcast, let’s rewire our thinking. Because once you understand these shifts, everything in your discovery approach, your specs, and your cross-functional rituals will evolve too.

a) Deterministic Thinking → Probabilistic Thinking

Traditional PMs work in rules and outcomes.

"User does X, system does Y."

Same input, same result.

AI PMs work with probabilities:

"User does X, model might do Y. Or Z. Or hallucinate."

Same input, multiple outcomes.

Marily: You don’t just launch a feature. You manage drift, feedback, and output confidence.

This means you’re no longer writing acceptance criteria. You’re defining tolerable failure rates.

You’re not reviewing features.

You’re reviewing the distribution of behavior.
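To make "tolerable failure rate" concrete, here is a minimal sketch of a release gate over a sample of graded outputs. Everything in it is an illustrative assumption rather than anything from Marily's or Marty's material: the function names, the 5% tolerance, and the choice of a Wilson score interval (at roughly 95% confidence) as the pessimistic estimate.

```python
from math import sqrt


def failure_rate_upper_bound(failures: int, total: int, z: float = 1.96) -> float:
    """Upper end of the Wilson score interval for the true failure rate."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = failures / total
    denom = 1 + z**2 / total
    centre = p + z**2 / (2 * total)
    margin = z * sqrt(p * (1 - p) / total + z**2 / (4 * total**2))
    return (centre + margin) / denom


def passes_release_gate(failures: int, total: int, tolerable_rate: float) -> bool:
    """Pass only if even the pessimistic estimate stays within tolerance."""
    return failure_rate_upper_bound(failures, total) <= tolerable_rate


# Same observed 3% failure rate, very different amounts of evidence.
print(passes_release_gate(3, 100, tolerable_rate=0.05))    # small sample
print(passes_release_gate(30, 1000, tolerable_rate=0.05))  # larger sample
```

With these hypothetical numbers, the 100-sample run does not clear the gate and the 1,000-sample run does, even though both show a 3% failure rate. That is what reviewing a distribution, rather than a feature, looks like in practice.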

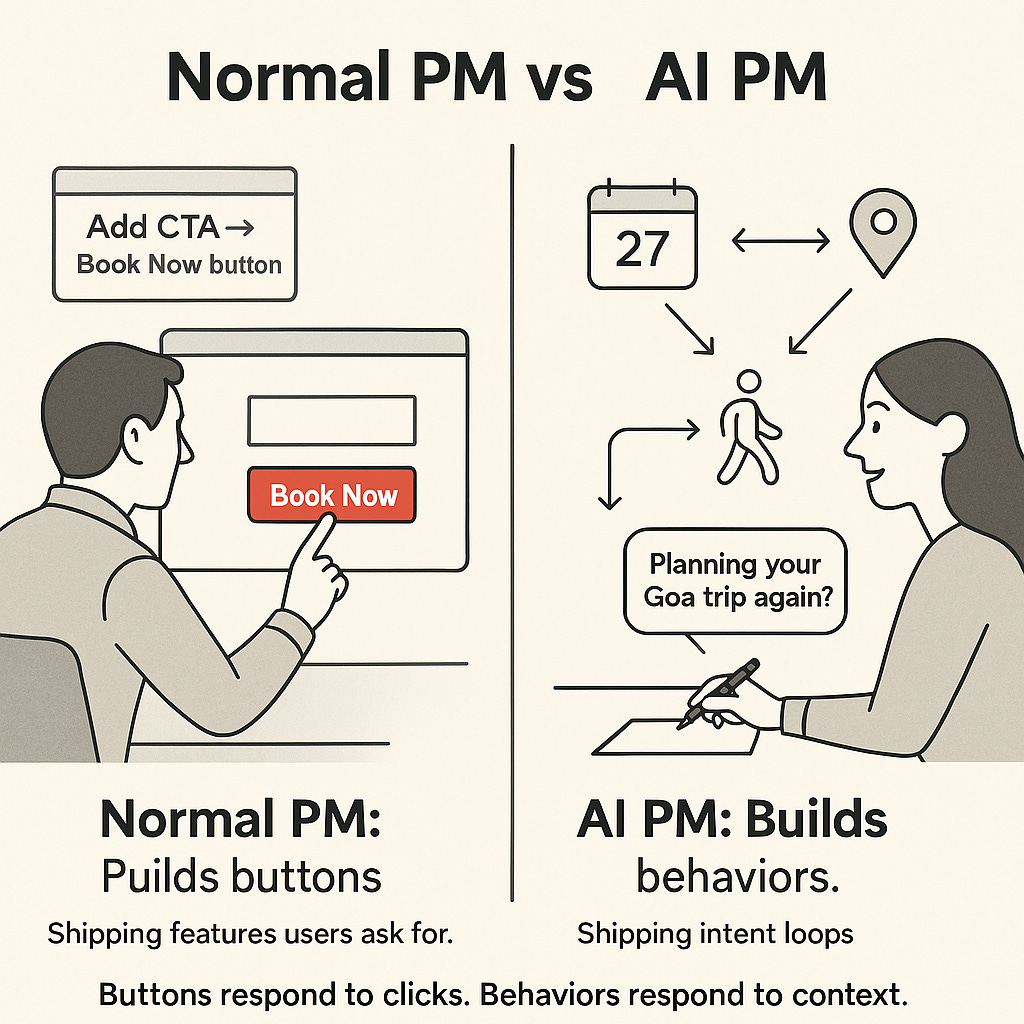

b) Features → Behaviors

AI is not a module. It’s not a toggle. It’s a pattern of behavior that emerges based on model design, data loops, and user context.

You’re not building a recommendation engine. You’re building trust in a probabilistic system.

This is where most PMs fail. They ship something with GPT-4 under the hood, and expect delight.

What they get is confusion.

Or worse, false confidence.

Marty: PMs must define how AI will behave, not just what it will do.

c) Roadmaps → Feedback Loops

When you launch a traditional product feature, you track adoption, engagement, and drop-offs.

When you launch an AI-powered feature, the story's just beginning.

The model learns. Users adapt. And the output can subtly shift, without a single line of code being touched.

So, as an AI PM, your roadmap isn’t just a build plan. It’s a monitoring and retraining ritual.

Your job becomes:

Watching a model drift in the wild

Feeding user interactions back into the system as training data

Defining when and how to re-tune the behavior

Lenny: PMs are used to planning. AI work is uncertain. You’re running experiments that may or may not work 6 months in.

And that’s why discovery doesn’t get easier with AI.

It becomes messier, loopier, more alive.

This is not a Gantt chart. This is a living organism. And you’re responsible for keeping it healthy.

d) Constraints → Trade-Offs

Every AI model drags a cost ledger behind it. It's never a clean win.

Every capability comes with a consequence.

Want higher accuracy? You’ll pay in latency.

Want user trust? You’ll need transparency, but that makes the model harder to build.

Want personalization? Get ready to justify data collection.

These aren’t just technical trade-offs. They’re product decisions with long-term business, ethical, and user experience consequences.

Marily: Most PMs forget they’re the ones responsible for defining what 'good enough' means for a model.

And that’s the hard truth: there is no objectively best model behavior.

There’s only behavior that aligns with your product’s purpose and your user’s expectations. You have to ask:

What’s the real-world cost of a model making the wrong call here?

If this model serves 10 million people tomorrow, who gets left out or, worse, harmed?

Would you trust this system if it recommended a treatment to your parent? A loan to your friend?

When does the AI need to say "we're not sure" instead of pretending certainty?

Is the model performance good enough to solve the job, or just good enough to ship a press release?

If you don’t wrestle with these trade-offs yourself, someone else will. Usually by accident.

The 4 Product Risks Every AI PM Must Own (And Why Most PMs Miss Them)

Let me tell you where most AI PM advice goes wrong: It focuses too much on the model and too little on the product.

You’re not here to debug neural networks. You’re here to manage risk, behavior, and user outcomes.

Marty Cagan lays it out clearly: every product has four types of risk. But with AI, each of these risks gets amplified, tangled, and harder to spot.

Here’s how each one plays out in AI:

Feasibility Risk → “Can the model even do this reliably?”

In traditional PM, this is an engineering call. But in AI PM? It’s a messy blend of:

Model performance

Training data quality

Real-world edge cases

You’re not just asking “can we build it?” You’re asking:

“Do we have the right data to teach the model?”

“Can we collect more data without compromising trust or legality?”

“Will this work well enough for the people we care about most?”

Lenny: “PMs must work comfortably with uncertainty—and still lead. In AI, that means making product bets even when feasibility is murky.”

Think like this:

Great AI PMs partner early with ML scientists to model reality—not fantasy.

They pressure test the model like a user would, not like an engineer would.

Value Risk → “Will this solve a real problem better than what exists?”

AI is a solution, not a problem. But many teams forget that.

They slap on a GPT wrapper and call it innovation. But the question is:

Does this AI-driven thing create net new value? Or just novelty?

Marty: “PMs must ensure AI is used to solve real problems, not just chase shiny objects.”

You should be ruthless here:

What’s the delta vs. a rule-based system?

If AI fails, can the product still deliver value?

Is the user problem so big that AI-level complexity is justified?
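The first question, the delta versus a rule-based system, can be answered with a few lines once you have a labeled sample. This is a rough sketch under assumptions: the helper names, the spam/ham labels, and the predictions are all made up for illustration, and plain accuracy stands in for whatever metric actually matters to your product.

```python
def accuracy(predictions, labels):
    """Share of predictions that match the labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def delta_vs_baseline(model_preds, rule_preds, labels):
    """Positive means the model beats the rule-based baseline on this sample."""
    return accuracy(model_preds, labels) - accuracy(rule_preds, labels)


# Hypothetical labeled sample for a spam filter.
labels      = ["spam", "ham", "spam", "ham", "spam"]
rule_preds  = ["spam", "ham", "ham",  "ham", "spam"]  # simple keyword rule
model_preds = ["spam", "ham", "spam", "ham", "spam"]  # ML model

print(round(delta_vs_baseline(model_preds, rule_preds, labels), 2))
```

If the delta is small, the rule-based system is probably the better product decision: cheaper, predictable, and easier to explain.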

Great PMs use AI like spice—not the main course. They frame the before/after journey like this:

Problem → Manual process with low trust

Insight → AI can triage 80% of cases with high confidence

Application → User regains time + trust without giving up control

Usability Risk → “Can users trust, understand, and adopt this?”

Let’s be honest: Even the smartest AI breaks if users don’t understand it.

PMs now need to design not just for usability, but for cognitive comfort and model transparency.

Marily: “Users need to know what the AI can and can’t do. Otherwise they lose trust fast.”

The best PMs obsess over:

Explainability: Why did the AI suggest this?

Boundaries: What happens when it fails?

Calibration: Does the UI reflect how confident the model really is?

If your AI feature fails silently or unpredictably, you’ll lose the user forever. Even if your model is technically “working.”
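The calibration question can be checked before it ever reaches the UI. One common measure is expected calibration error (ECE): bucket predictions by stated confidence, then compare each bucket's average confidence with how often it was actually right. The sketch below assumes equal-width buckets and uses invented data; the function name is a placeholder, not part of any specific library.

```python
def expected_calibration_error(confidences, correct, n_bins=5):
    """Weighted average gap between stated confidence and observed accuracy."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    total = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        acc = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - acc)
    return ece


# Hypothetical model that says "90% sure" but is right only half the time.
overconfident = expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5)
print(round(overconfident, 2))
```

A value near zero means the confidence shown to users can be taken at face value. A large value means the UI is promising certainty the model does not have.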

Viability Risk → “Can we sustain this—ethically, legally, financially?”

The model isn’t your product. The infrastructure behind it is. And many PMs forget that.

Can you afford to run this at scale? Are you collecting and storing user data ethically? Are you prepared for edge cases that make the news?

Marty: “AI PMs must own viability deeply—infra cost, compliance, privacy, risk. It’s non-negotiable.”

You can’t ship an AI product without:

Costing out your inference pipeline

Forecasting latency spikes and fallbacks

Partnering with legal on GDPR, IP, and consent

Knowing when hallucinations cross the line
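Costing out the inference pipeline is mostly arithmetic, and it is worth doing before the roadmap review. A back-of-the-envelope sketch follows; the traffic, token counts, and per-1K-token prices are placeholders, not any vendor's actual pricing.

```python
def monthly_inference_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
    days: int = 30,
) -> float:
    """Rough monthly spend for a token-priced model API."""
    per_request = (
        input_tokens / 1000 * price_in_per_1k
        + output_tokens / 1000 * price_out_per_1k
    )
    return requests_per_day * days * per_request


# Hypothetical feature: 50k requests/day, 1,200 tokens in, 300 tokens out.
cost = monthly_inference_cost(
    requests_per_day=50_000,
    input_tokens=1_200,
    output_tokens=300,
    price_in_per_1k=0.001,   # placeholder price in dollars
    price_out_per_1k=0.002,  # placeholder price in dollars
)
print(f"${cost:,.0f} per month")
```

Rerun it with 10x the traffic or a longer prompt and you will see quickly whether the feature's unit economics survive scale.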

This isn’t the sexy part. But it’s the make-or-break.

AI magnifies every traditional PM risk across feasibility, value, usability, and viability. Bad PMs delegate those risks. Good PMs document them. Great AI PMs design the entire product around mitigating them.

What Great AI PMs Actually Do Differently

This is the part nobody tells you—but it will kill your product if you miss it.

We’ve covered the theory, the mental shifts, and the risks. Now let’s talk execution. Not hypotheticals. Not frameworks. Just what great AI PMs are doing differently in the wild.

Because managing AI isn’t about being more technical. It’s about being more product-aware in a probabilistic world.

Here’s what separates those building AI that lasts from those building AI that demos well:

They obsess over observability, not just performance

Anyone can measure accuracy. Great PMs measure confidence decay.

They track:

How often the model gets it wrong and no one notices

Where user behavior changes post-AI launch

How output quality shifts as data freshness fades

Insight → The worst bugs in AI aren’t crashes. They’re quiet misfires that break trust slowly.

They build dashboards that look like this:

Model Confidence Heatmap → By segment, time, intent

Silent Fail Rate Tracker → % mismatches caught via support tickets

Drift Monitoring → Feature distribution shift from training data

This isn't a metrics culture. It’s a vigilance culture. Because AI doesn’t fail like software. It degrades.
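For the drift monitoring row, one widely used measure of feature distribution shift is the Population Stability Index (PSI). The sketch below is a simplified version for a single numeric feature, assuming equal-width bins taken from the training sample; the function name and the data are illustrative.

```python
from math import log


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a training-time sample and a live sample of one feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins
    if width == 0:
        width = 1.0  # all training values identical; avoid dividing by zero

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(idx, n_bins - 1))] += 1  # clip out-of-range values
        # eps keeps empty bins from producing log(0)
        return [max(c / len(values), eps) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * log(ai / ei) for ei, ai in zip(e, a))


training = list(range(100))               # hypothetical training-time values
live_shifted = [v + 50 for v in training]  # live traffic has moved upward

print(population_stability_index(training, training))           # no drift
print(population_stability_index(training, live_shifted) > 0.25)  # clear drift
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but treat those cutoffs as a starting point and tune them to your own product.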

They prioritize controllability over intelligence

Let’s be real: a 95% accurate model with no override = useless in production.

Great PMs ask:

Can users steer the system when it feels wrong?

Is there a fallback when confidence is low?

Can support, ops, or editors intervene without retraining?
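Those three questions map naturally onto a routing rule. Here is a minimal sketch, assuming the model exposes a confidence score between 0 and 1; the `Prediction` type, the route names, and both thresholds are hypothetical and would need tuning against real data.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Prediction:
    answer: str
    confidence: float  # assumed to be a score between 0 and 1


def route(
    prediction: Prediction,
    auto_threshold: float = 0.85,
    suggest_threshold: float = 0.5,
) -> Tuple[str, Optional[str]]:
    """Decide how much autonomy the model gets for this one output."""
    if prediction.confidence >= auto_threshold:
        return "auto", prediction.answer      # act on it, user can still undo
    if prediction.confidence >= suggest_threshold:
        return "suggest", prediction.answer   # show as a suggestion the user steers
    return "human", None                      # fallback: hand off to a person


print(route(Prediction("Refund approved", 0.93)))
print(route(Prediction("Refund approved", 0.62)))
print(route(Prediction("Refund approved", 0.31)))
```

Because the thresholds are plain configuration, support or ops can tighten them during an incident without anyone retraining the model.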

PMing AI is like managing a brilliant but unpredictable teammate. You don’t just admire them; you give them a process.

Great AI UX feels like collaboration, not delegation.

They test with dissonance, not delight

Most PMs test for delight: “Did the user smile?” Great AI PMs test for dissonance: “Did the user trust this?”

That means testing:

When the AI gives a technically accurate but humanly bizarre answer

When the AI disagrees with the user’s expectation

When the same task returns different results across time/users

And asking:

“How does this output make the user feel—confident, confused, or dismissed?”

They don’t just watch usability tests. They sit in support queues, review downvotes, and read the rage in user comments.
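The third dissonance case, the same task returning different results, is easy to quantify. A rough sketch: run the same prompt several times, normalize the outputs, and measure how often the most common answer appears. The function name and the sample outputs are invented, and exact-match comparison after lowercasing is a deliberate simplification.

```python
from collections import Counter


def agreement_rate(outputs):
    """Share of runs that produced the most common (normalized) output."""
    if not outputs:
        raise ValueError("need at least one output")
    normalized = [o.strip().lower() for o in outputs]
    top_count = Counter(normalized).most_common(1)[0][1]
    return top_count / len(normalized)


# Hypothetical: the same support ticket sent to the model four times.
runs = [
    "Refund approved",
    "refund approved ",
    "Escalate to agent",
    "Refund approved",
]
print(agreement_rate(runs))
```

A low agreement rate on a task users expect to be stable is a dissonance bug, even if every individual answer looks defensible.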

They co-design with the model team from Day 0

PMs used to throw specs over the wall to ML. But AI PMs don’t wait. They:

Include researchers in discovery

Frame product goals in terms of task ambiguity, not just features

Prototype model scaffolding early (label taxonomies, input formats, thresholds)

Marily: If you want a model to behave well, start with good product instincts, not perfect data.

They don’t ask “What can the model do?” They define, “What behavior do we want it to learn?”

Builder’s Checklist: Before You Ship AI

Here’s what should be in your head before you release anything with an ML model inside:

- Do we know the *worst-case* output this system could produce?

- Have we stress-tested the model against edge intents, accents, sarcasm, or cultural bias?

- Do we expose confidence to the user? Should we?

- What’s the plan when it fails: user fallback, model fallback, or human-in-the-loop?

- Can our infra handle scale without latency spikes?

- Who’s accountable for mistakes: us, the vendor, or the model?

If you don’t know these answers, you’re not launching a product. You’re launching a liability.

Up next → Part 2: The AI PM Operating System

We’ve laid the foundation: how AI changes product thinking, risk, and behavior.

In Part 2, we get tactical:

Tooling ≠ Thinking: The real mindset behind effective prompting

Top 5 workflows where AI 10x-es your speed as a PM

The biggest AI PM traps (and how to dodge them)

How to learn AI without a PhD—or a fake credential stack

Why PMs won’t be replaced by AI—but the lazy ones will

Final notes: AI isn’t a trend, it’s a default. You don’t have to build it, but you do have to understand it.

Plus: curated resources, playbooks, and dashboards that serious PMs are using to level up in the new era.

This isn’t AI for hype. This is AI for shipping.